PARROT (ICML 20) 论文阅读

An Imitation Learning Approach for Cache Replacement

Abstract

we propose an imitation learning approach (PARROT) to automatically learn cache access patterns by leveraging Belady’s, an oracle policy that computes the optimal eviction decision given the future cache accesses.

Introduction

- We propose cache replacement as a challenging new IL/RL (reinforcement learning) benchmark involving dynamically changing action spaces, delayed rewards, and significant real-world impact. To that end, we release an associated Gym environment.

Cache Preliminaries

太简单了,略

Casting Cache Replacement as Imitation Learning

把 cache 的概念映射了一堆,要是重要再补吧

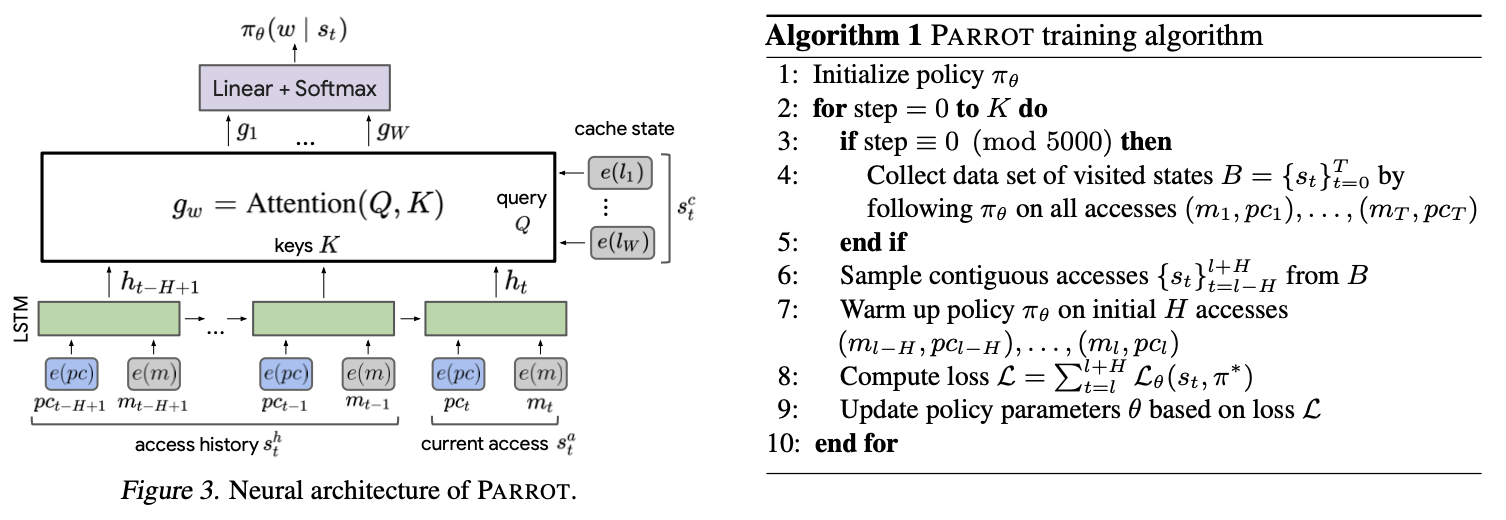

PARROT: Learning to Imitate Belady’s

因为不太懂,所以直接丢结论:

关于算法,这里讨论了一个 compounding errors: At test time, since PARROT learns an imperfect approximation of the oracle policy, it will eventually make a mistake and evict a suboptimal cache line. This leads to cache states that are different from those seen during training, which the learned policy has not trained on, leading to further mistakes.

Notably, we can compute our oracle policy (Belady’s) at any state during training, as long as the future accesses are known. This differs from many IL tasks, where querying the expert is expensive and limited.

Experiments

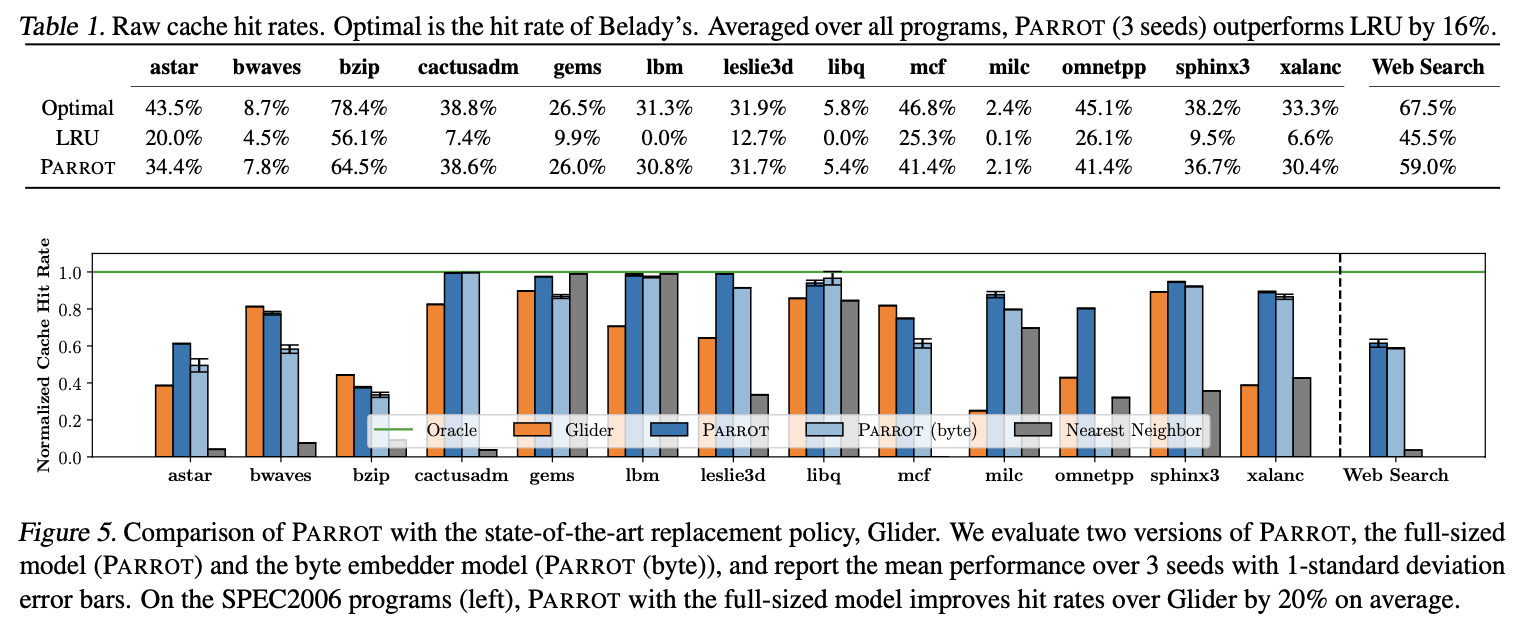

注意:normalized hit rate = \(\frac{r-r_{LRU}}{r_{opt}-r_{LRU}}\)

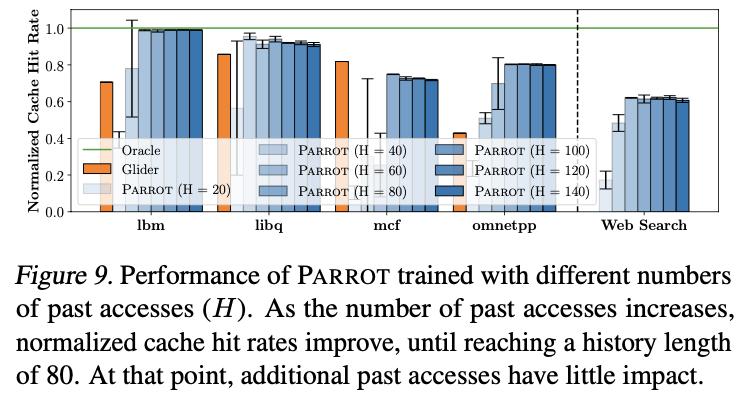

其实不是很明白两个 model 的区别到底在哪:”We observe that the past accesses become an increasingly better predictor of the future as the number past accesses increase, until about 80. After that point, more past information doesn’t appear to help approximate Belady’s. Interestingly, Glider experiences a similar saturation in improvement from additional past accesses, but at around 30 past accesses. This suggests that learning a replacement policy end-to-end with PARROT can effectively leverage more past information than simply predicting whether a cache line is cache-friendly or cache-averse.”

Related Work

略

Conclusion and Future Directions

“Software caches may be an especially promising area for applying our approach, as they tolerate higher latency in the replacement policy and implementing more complex replacement policies is easier in software.”

评论

本文自己提到,当前的 overhead 对于 hardware cache 不太友好,比较有前景的还是 software cache;但既然如此,为什么又在使用 cpu traces/benchmark,以及对比的是针对 cpu cache 的其他工作(比如 Glider)呢?在我看来是选择了不对应的 workload 以及更容易的 baseline。更容易是因为 cpu cache 上的 ML 工作总体倾向于轻量,也因此潜在地牺牲了 accuracy。当然,本文使用的如果是最重量的 Glider 作为 baseline 也不算太容易。