TMO (ASPLOS '22) Paper Reading Notes

TMO: Transparent Memory Offloading in Datacenters

Topic: resource elasticity (memory/OS)

Abstract

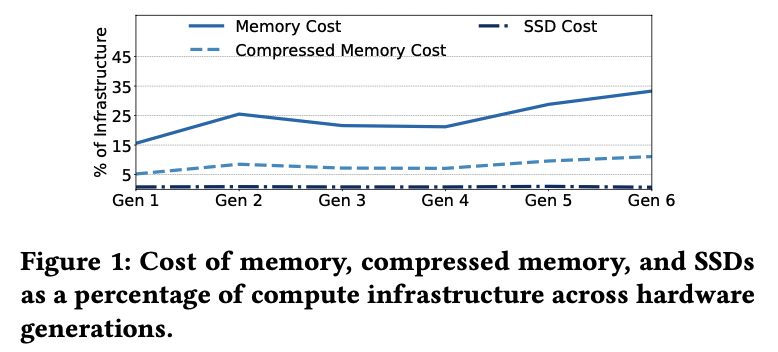

Trend: compressed memory and NVMe SSDs offer higher capacity at lower cost and power than DRAM.

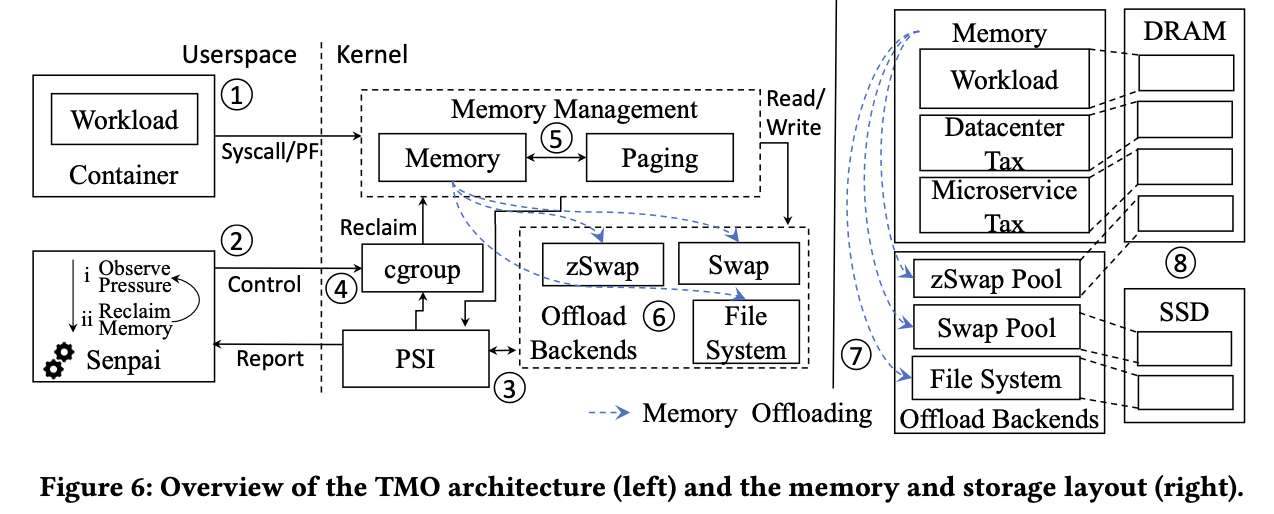

TMO: transparent memory offloading solution for heterogeneous datacenter environments via kernel

Details worth noting: TMO holistically identifies offloading opportunities from not only the application containers but also the sidecar containers that provide infrastructure-level functions. To maximize memory savings, TMO targets both anonymous memory and file cache, and balances the swap-in rate of anonymous memory and the reload rate of file pages that were recently evicted from the file cache.

The results are impressive: TMO has been running in production for more than a year, and has saved between 20-32% of the total memory across millions of servers in our large datacenter fleet. We have successfully upstreamed TMO into the Linux kernel.

Introduction

The introduction describes the shortcomings of the existing g-swap in detail:

- It only supports offloading to compressed memory (not NVMe SSDs or NVM), and not all data suits compressed memory (counter-example: machine learning models with quantized byte-encoded values).

- It is empirical: it relies on extensive offline application profiling and sets a static target page-promotion rate (counter-example: experiments show that a high promotion rate can improve performance).
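The compressibility point in the first bullet can be illustrated with a small experiment. This is only a sketch: the random bytes are an assumed stand-in for quantized, byte-encoded model weights, the all-zero page is an assumed stand-in for sparse application memory, and `zlib` is used for convenience rather than because it is what any kernel compressor uses.

```python
import random
import zlib

PAGE_SIZE = 4096  # bytes; a common page size, used here only for illustration


def compression_ratio(page: bytes) -> float:
    """Return compressed size divided by original size (lower is better)."""
    return len(zlib.compress(page)) / len(page)


rng = random.Random(0)  # fixed seed so the demo is repeatable

# Assumed stand-in for quantized, byte-encoded weights: near-uniform random bytes.
quantized_like = bytes(rng.getrandbits(8) for _ in range(PAGE_SIZE))
# Assumed stand-in for a sparsely used heap page: all zeros.
sparse_like = bytes(PAGE_SIZE)

print(f"quantized-like page ratio: {compression_ratio(quantized_like):.3f}")
print(f"sparse page ratio:         {compression_ratio(sparse_like):.3f}")
```

A page whose ratio stays close to 1 gains almost nothing from a compressed-memory backend, which is why a backend such as an SSD can be the better target for that kind of data.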

Focus:

- how much memory to offload

- a new kernel mechanism called Pressure Stall Information (PSI), which directly measures in realtime the lost work due to resource shortage across CPU, memory, and I/O (per-process and per-container)

- A userspace agent called Senpai uses the PSI metrics to dynamically decide (together with hardware heterogeneity in datacenters)

- what memory to offload

- Between file cache and swap-backed anonymous memory, the kernel currently prefers to reclaim file cache (I don't understand why yet); TMO treats the two more evenly.

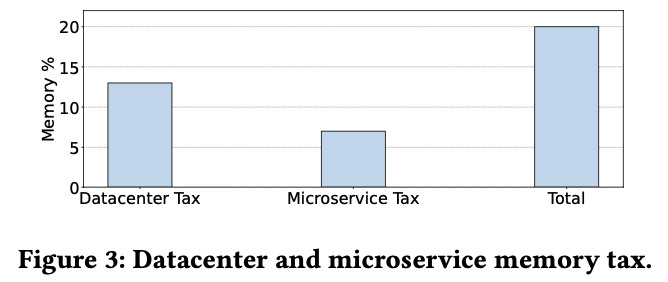

- TMO also considers offloading sidecar containers (of the 20-32% total savings, 13% comes from this).
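The "how much memory to offload" decision can be sketched as a proportional controller: reclaim a small fraction of the container's memory each period, and back off as PSI approaches a threshold. This is my reading of the idea, not Senpai's actual code. The function name `reclaim_amount` and the default values of `psi_threshold` and `reclaim_ratio` are assumptions chosen for illustration.

```python
def reclaim_amount(current_mem: int, psi_some: float,
                   psi_threshold: float = 0.001,
                   reclaim_ratio: float = 0.0005) -> int:
    """Bytes to reclaim in one control period.

    current_mem   -- container memory footprint in bytes
    psi_some      -- observed memory pressure as a fraction (0.001 == 0.1%)
    psi_threshold -- pressure at which reclaim stops (assumed value)
    reclaim_ratio -- maximum fraction reclaimed per period (assumed value)
    """
    # Headroom shrinks linearly to zero as pressure approaches the threshold.
    headroom = max(0.0, 1.0 - psi_some / psi_threshold)
    return int(current_mem * reclaim_ratio * headroom)


# Hypothetical 1 GB container observed at three pressure levels.
for psi in (0.0, 0.0005, 0.002):
    print(f"psi_some={psi:.4f} -> reclaim {reclaim_amount(10**9, psi)} bytes")
```

The small per-period step keeps the controller gentle: with no pressure it probes for cold memory slowly, and once pressure reaches the threshold it stops reclaiming so the workload can recover.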

Questions:

- How was the balance between file cache and swap-backed anonymous memory handled originally?

MEMORY OFFLOADING OPPORTUNITIES AND CHALLENGES IN DATACENTERS

Memory and SSD cost Trends

Questions:

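- This figure confuses me. Doesn't the relative cost depend on the absolute amount of hardware deployed? Memory costing a lot doesn't necessarily mean it is poor value; maybe there is simply more of it deployed. And SSD costing little today doesn't mean that investing more in it is worthwhile?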

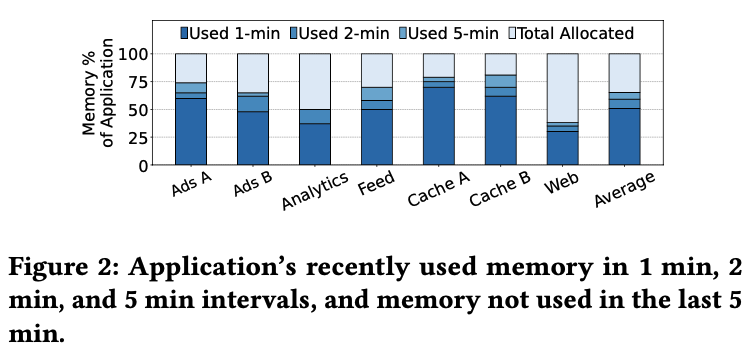

Cold Memory as Offloading Opportunity

The memory coldness of applications vary drastically, which emphasizes the importance of having an offloading method that is robust against application’s diverse memory behaviors.

Memory Tax

datacenter memory tax: the memory required for software packages, profiling, logging, and other supporting functions related to the deployment of applications in datacenters.

microservice memory tax: all the memory required by applications due to their disaggregation into microservices, e.g., to support routing and proxy, and it is applicable uniquely to microservice architectures.

The performance SLA for most of the memory tax is more relaxed than that of memory directly consumed by applications. -> good for offloading!

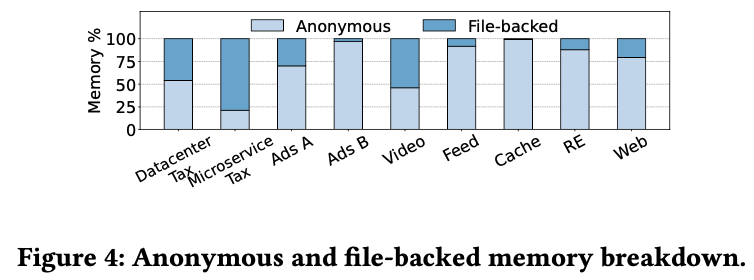

Anonymous and File-Backed Memory

Memory is separated into two main categories, anonymous memory and file-backed memory. Anonymous memory is allocated by applications and is not backed by a file or a device. File-backed memory represents allocated memory in relation to a file and is further stored in the kernel’s page cache.

As the figure shows, anonymous memory makes up a fairly large share.

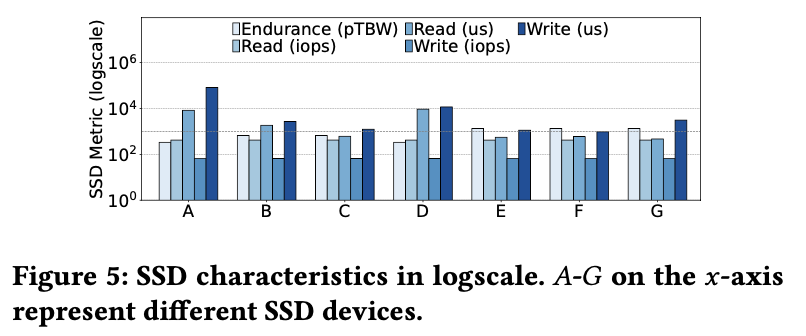

Hardware Heterogeneity of Offload Backend

TMO Design

Transparent Memory Offloading Architecture

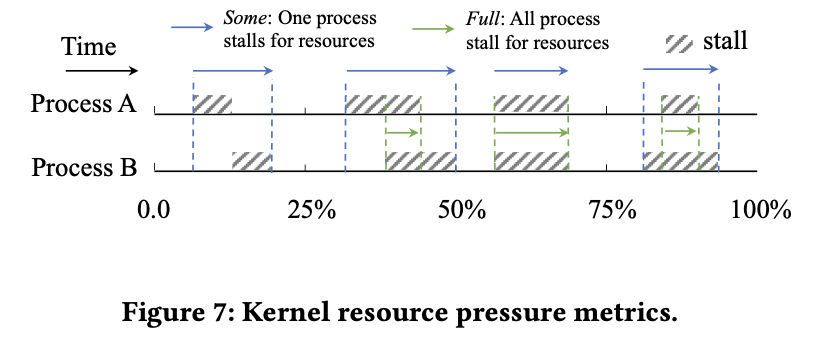

Defining Resource Pressure

Pressure used to be measured by fault rate, which is clearly suboptimal: during warm-up, or across heterogeneous hardware, the same fault rate can mean very different things.

In this example, PSI some = 12.5% + 18.75% + 12.5% + 12.5% = 56.25%; full = 0% + 6.25% + 12.5% + 6.25% = 25%.
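To make the some/full distinction concrete, here is a minimal sketch that computes both from a discretized timeline. The timeline is hypothetical: 16 equal time slots and two tasks, arranged so the totals match the sums above. It does not reproduce the layout of the paper's figure. "Some" counts slots where at least one task is stalled on memory; "full" counts slots where every non-idle task is stalled.

```python
def psi_some_full(timeline):
    """Compute (some, full) as fractions of wall-clock time.

    timeline -- list of (stalled, running) task counts, one pair per
                equal-length time slot; idle tasks are not counted.
    """
    slots = len(timeline)
    # "some": at least one task is stalled on memory in this slot.
    some = sum(1 for stalled, running in timeline if stalled > 0)
    # "full": tasks are stalled and none is making progress.
    full = sum(1 for stalled, running in timeline
               if stalled > 0 and running == 0)
    return some / slots, full / slots


# Hypothetical two-task timeline, 16 slots of 6.25% each.
timeline = (
    [(2, 0)] * 4    # both tasks stalled: counts toward some and full
    + [(1, 1)] * 5  # one stalled, one running: counts toward some only
    + [(0, 2)] * 7  # both running: no pressure
)
some, full = psi_some_full(timeline)
print(f"some={some:.2%} full={full:.2%}")
```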

PSI captures the impact of memory-access slowdown to an application (instead of resident set size, RSS, or promotion rate).

To track memory pressure, PSI records time spent on events that occur exclusively when there is a shortage of memory.
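On Linux, these measurements are exposed as text in `/proc/pressure/memory` (and per cgroup in `memory.pressure`), with one `some` line and one `full` line holding running averages and a cumulative stall time in microseconds. A minimal parsing sketch follows. The helper name `parse_pressure` and the sample values are made up for illustration.

```python
def parse_pressure(text: str) -> dict:
    """Parse PSI text into {"some": {...}, "full": {...}} with float values."""
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()
        # Each field looks like "avg10=0.12" or "total=123456".
        result[kind] = {key: float(value)
                        for key, value in (f.split("=") for f in fields)}
    return result


# Sample in the kernel's PSI file format; the numbers are invented.
sample = """some avg10=0.12 avg60=0.05 avg300=0.01 total=123456
full avg10=0.00 avg60=0.00 avg300=0.00 total=7890"""

pressure = parse_pressure(sample)
print(pressure["some"]["avg10"], pressure["full"]["total"])
```

On a real machine the same function can be fed the contents of `/proc/pressure/memory`, provided the kernel was built with PSI support.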

(Skipped.)

Determining Memory Requirements of Containers

Kernel Optimizations for Memory Offloading

In production, reclaim driven by Senpai consumes 0.05% of all CPU cycles, a negligible amount.

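Historically, the kernel has been very conservative in using swap. This relegated swap to only be used as an emergency overflow for memory. (Background: anonymous memory from applications was traditionally considered more important. The coldest files in the file cache are unlikely to be needed again for a long time, whereas nothing comparable is known about anonymous memory, so swap was used to reclaim anonymous memory only as a last resort.)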

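TMO's approach is to decide based on what happens when the file cache is reclaimed: if recently evicted file pages are being reloaded frequently, the file cache should not shrink further, so anonymous memory takes a bit more of the reclaim instead.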
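The balancing idea can be sketched as follows. This is a simplified illustration, not the kernel's algorithm: the function name `split_reclaim` and the proportional split are assumptions. The rule is that while evicted file pages are not being reloaded (no refaults), only file cache is reclaimed; once refaults appear, reclaim shifts toward anonymous memory in proportion to how the refault count compares with the swap-in count.

```python
def split_reclaim(refaults: int, swapins: int, pages_to_reclaim: int):
    """Return (file_pages, anon_pages) to reclaim in this round.

    refaults -- recently evicted file pages that had to be reloaded
    swapins  -- anonymous pages that had to be swapped back in
    """
    if refaults == 0:
        # File cache shows no sign of thrashing: reclaim from it only.
        return pages_to_reclaim, 0
    # The costlier a pool has recently been to reclaim from,
    # the smaller its share of this round's reclaim.
    anon_share = refaults / (refaults + swapins)
    anon_pages = round(pages_to_reclaim * anon_share)
    return pages_to_reclaim - anon_pages, anon_pages


print(split_reclaim(0, 50, 100))   # no refaults: file cache only
print(split_reclaim(30, 10, 100))  # file cache thrashing: lean on anon
print(split_reclaim(10, 30, 100))  # swap-ins dominate: lean on file cache
```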

For reclaim, see "Better active/inactive list balancing".

Evaluation

(1) How much memory can TMO save? (2) How does TMO impact memory-bound applications? (3) Are PSI metrics more effective than the promotion-rate metric? (4) How to tune TMO’s configurable parameters? (5) Can TMO avoid SSD wear-out due to offloading writes?

Fleet-Wide Memory Savings

The performance metric is requests per second (RPS) with a predefined target tail latency. Each server automatically throttles its RPS in order to meet the tail latency.

I skipped the rest. After reading the articles recommended at the end, the paper and slides become very easy to follow.

Discussion

Production Deployment Experience

Limitations and Future Work

This is interesting: the offload backend is currently chosen manually. The paper says a more fundamental solution is for the kernel to manage a hierarchy of offload backends, e.g., automatically using zswap for warmer pages and using SSD for colder or less-compressible pages, as well as including NVM and CXL devices into the memory hierarchy in the future. The kernel reclaim algorithm should dynamically balance across these pools of memory. We are actively working on this architecture.
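A toy version of such a tiering policy might look like the sketch below. It is purely hypothetical, since the paper describes this as future work: the `PageStats` fields, the function `choose_backend`, and both thresholds are invented for illustration. It also assumes the page has already been selected for offloading.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    idle_seconds: float       # time since the page was last accessed
    compression_ratio: float  # compressed size / original size


def choose_backend(page: PageStats,
                   warm_cutoff_s: float = 120.0,
                   max_ratio: float = 0.5) -> str:
    """Pick an offload backend for a page (thresholds are assumptions)."""
    is_warm = page.idle_seconds < warm_cutoff_s
    compresses_well = page.compression_ratio <= max_ratio
    # Warm, compressible pages go to zswap, where they are quick to restore.
    if is_warm and compresses_well:
        return "zswap"
    # Colder or poorly compressible pages go to the SSD.
    return "ssd"


print(choose_backend(PageStats(idle_seconds=30, compression_ratio=0.3)))
print(choose_backend(PageStats(idle_seconds=30, compression_ratio=0.95)))
print(choose_backend(PageStats(idle_seconds=3600, compression_ratio=0.3)))
```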

Hardware for Memory Offloading

Related Work

Summary

- Recommended: a fairly detailed introduction to TMO

- Recommended: "Memory reclaim: how the LRU list algorithm for file pages works" (original title: 内存回收 之 File page的 lru list算法原理)

- Takeaway: besides very hardcore system optimizations, people in any field can also consider auto-configuration.