Paxos (PODC 07) Paper Reading Notes

Paxos Made Live - An Engineering Perspective

Abstract

- Describes the authors' experience building a fault-tolerant database on top of the Paxos consensus algorithm, which turned out to be non-trivial in practice.

- Describes selected algorithmic and engineering problems they encountered, and the solutions they found for them.

Introduction

Background

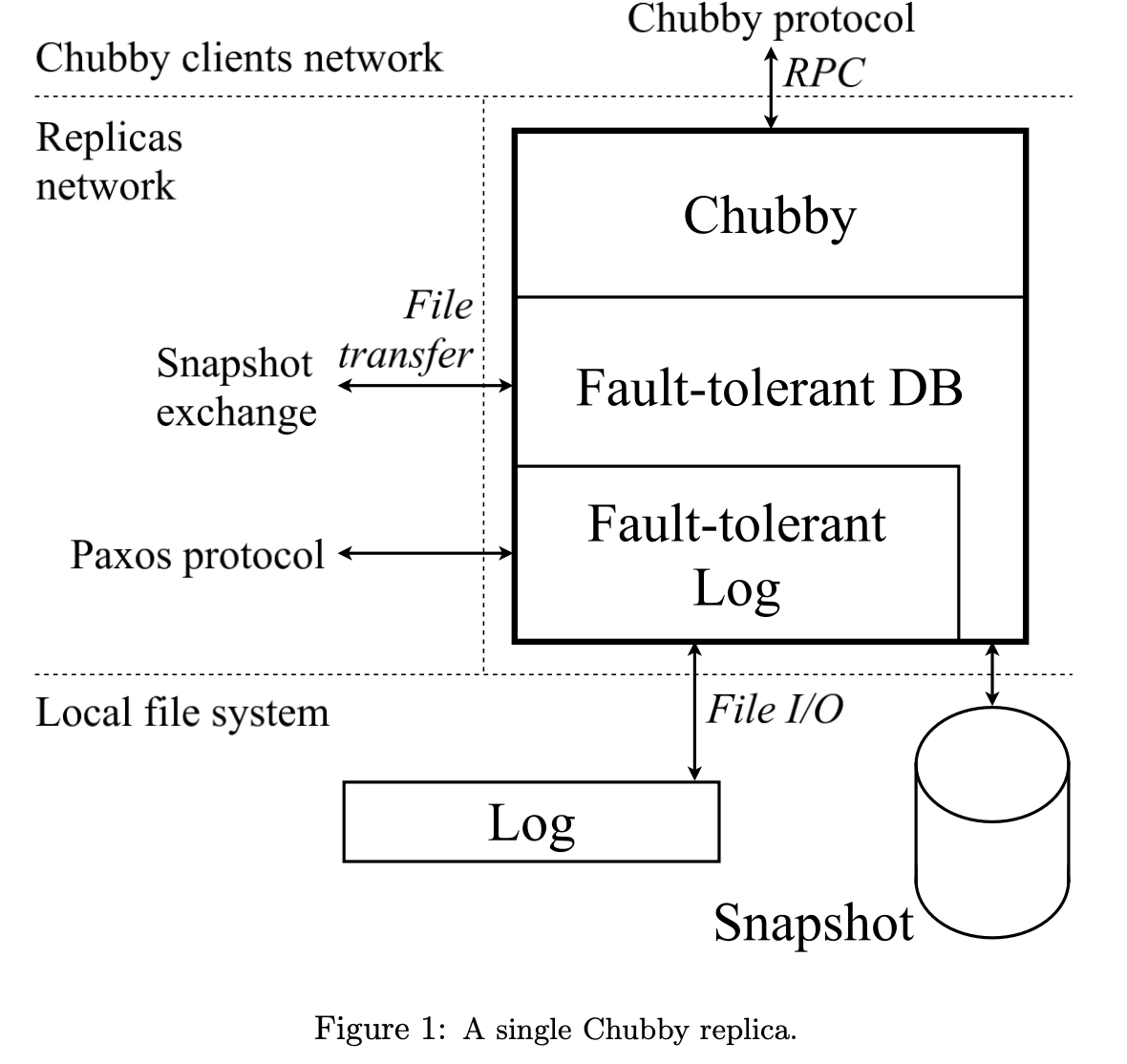

Chubby is a fault-tolerant system at Google that provides a distributed locking mechanism and stores small files.

Several Google systems – such as the Google Filesystem (GFS) and Bigtable – use Chubby for distributed coordination and to store a small amount of metadata. A typical Chubby cell consists of five replicas, running the same code, each running on a dedicated machine. Every Chubby object (e.g., a Chubby lock, or file) is stored as an entry in a database. It is this database that is replicated. At any one time, one of these replicas is considered to be the “master”.

Chubby clients (such as GFS and Bigtable) contact a Chubby cell for service. The master replica serves all Chubby requests. If a Chubby client contacts a replica that is not the master, the replica replies with the master’s network address. The Chubby client may then contact the master. If the master fails, a new master is automatically elected, which will then continue to serve traffic based on the contents of its local copy of the replicated database. Thus, the replicated database ensures continuity of Chubby state across master failover.
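The redirect-to-master behaviour described above can be sketched in a few lines. This is only an illustration of the idea, not Chubby's actual protocol: the `Replica` class, the reply dictionaries, the `build_cell` and `client_read` helpers, and the replica names `r0`..`r4` are all hypothetical, and master election itself is not modelled (the master is simply reassigned by hand).

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Replica:
    address: str
    master_address: str  # who this replica currently believes is the master
    db: Dict[str, str] = field(default_factory=dict)  # local copy of the replicated database

    def handle(self, key: str) -> dict:
        if self.address != self.master_address:
            # A non-master replica does not serve the request; it names the master.
            return {"ok": False, "redirect": self.master_address}
        return {"ok": True, "value": self.db.get(key)}


def build_cell(n: int, master: str, contents: Dict[str, str]) -> Dict[str, Replica]:
    # Every replica starts with an identical copy of the database.
    return {f"r{i}": Replica(f"r{i}", master, dict(contents)) for i in range(n)}


def client_read(cell: Dict[str, Replica], first_contact: str, key: str,
                max_hops: int = 3) -> Optional[str]:
    address = first_contact
    for _ in range(max_hops):
        reply = cell[address].handle(key)
        if reply["ok"]:
            return reply["value"]
        address = reply["redirect"]  # follow the pointer to the master
    raise RuntimeError("no master found")


cell = build_cell(5, master="r0", contents={"lock/a": "held"})
value_before = client_read(cell, "r3", "lock/a")  # r3 redirects to r0

# Simulated failover: r1 becomes master and serves from its own local copy.
for replica in cell.values():
    replica.master_address = "r1"
value_after = client_read(cell, "r0", "lock/a")
```

Because every replica holds the same database contents, the value read after the simulated failover matches the one read before it, which is the continuity property the paragraph above describes.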

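Chubby is critical infrastructure. Rather than keep relying on an off-the-shelf third-party implementation ("3DB"), as the original version did, the authors decided to build their own system, one whose correctness they could be confident in.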

Architecture outline

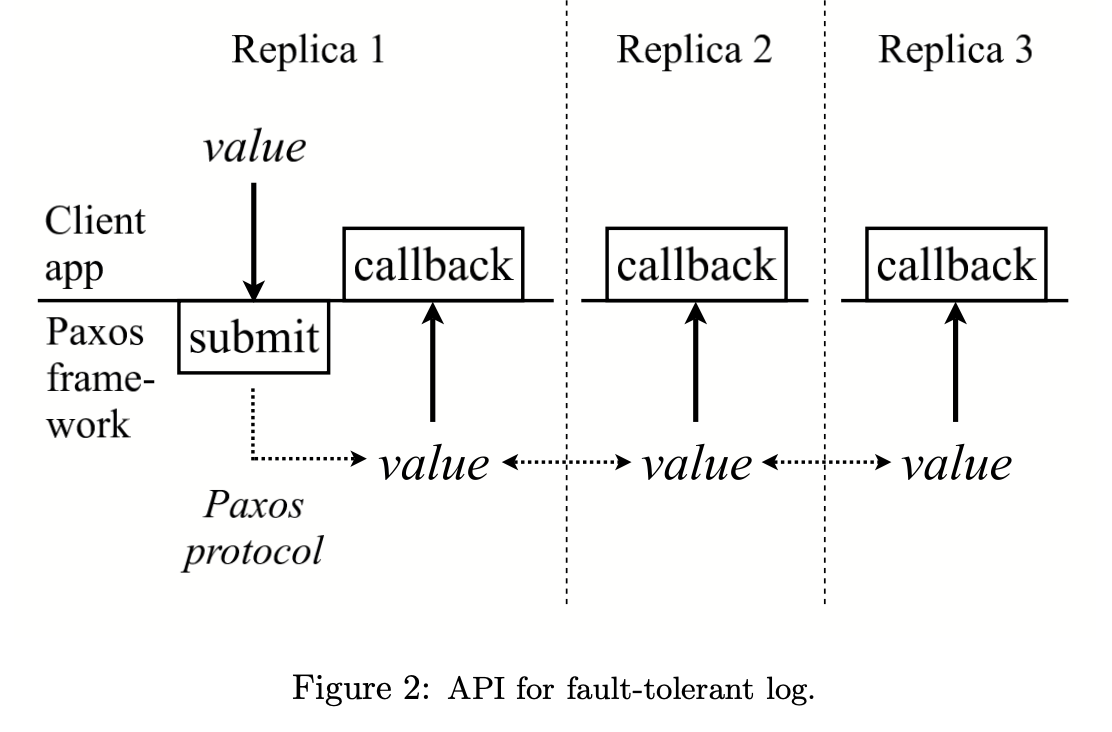

We devoted effort to designing clean interfaces separating the Paxos framework, the database, and Chubby. We did this partly for clarity while developing this system, but also with the intention of reusing the replicated log layer in other applications.
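To make the layering concrete, here is a minimal sketch of three components that talk to each other only through narrow interfaces. It is an assumption-laden toy, not the paper's API: `ReplicatedLog`, `Database`, `LockService`, and all of their methods are hypothetical names, and the log "reaches agreement" by appending locally instead of running Paxos.

```python
from typing import Callable, Dict, List, Optional, Tuple

Entry = Tuple[str, str, Optional[str]]  # (operation, key, value)


class ReplicatedLog:
    """Stand-in for the Paxos-backed log; agreement here is just a local append."""

    def __init__(self) -> None:
        self.entries: List[Entry] = []
        self._listeners: List[Callable[[int, Entry], None]] = []

    def subscribe(self, callback: Callable[[int, Entry], None]) -> None:
        self._listeners.append(callback)

    def submit(self, entry: Entry) -> int:
        self.entries.append(entry)
        index = len(self.entries) - 1
        for callback in self._listeners:
            callback(index, entry)  # notify every consumer of the committed entry
        return index


class Database:
    """Key-value store whose state is derived only from log entries."""

    def __init__(self, log: ReplicatedLog) -> None:
        self._log = log
        self._state: Dict[str, str] = {}
        log.subscribe(self._apply)

    def _apply(self, index: int, entry: Entry) -> None:
        op, key, value = entry
        if op == "put" and value is not None:
            self._state[key] = value
        elif op == "delete":
            self._state.pop(key, None)

    def put(self, key: str, value: str) -> None:
        self._log.submit(("put", key, value))

    def delete(self, key: str) -> None:
        self._log.submit(("delete", key, None))

    def get(self, key: str) -> Optional[str]:
        return self._state.get(key)


class LockService:
    """Chubby-like lock layer that knows only about the database interface."""

    def __init__(self, db: Database) -> None:
        self._db = db

    def acquire(self, name: str, owner: str) -> bool:
        if self._db.get("lock/" + name) is not None:
            return False
        self._db.put("lock/" + name, owner)
        return True

    def release(self, name: str, owner: str) -> bool:
        if self._db.get("lock/" + name) != owner:
            return False
        self._db.delete("lock/" + name)
        return True


log = ReplicatedLog()
db = Database(log)
mirror = Database(log)  # a second consumer of the same log
locks = LockService(db)
```

Since `Database` depends only on `submit` and `subscribe`, another application could consume the same log without knowing anything about locks, which is the kind of reuse the paragraph above has in mind. In this toy, `mirror` ends up with the same state as `db` purely by applying the same entries.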

On Paxos

(Omitted.)

Comments

For the rest, see this article.